Recently, I ran several targeted LoRA training sessions with lora-scripts.

Training repo: https://github.com/Akegarasu/lora-scripts

CPU: 13th Gen Intel(R) Core(TM) i9-13900K

GPU: NVIDIA RTX 4090 (24 GB)

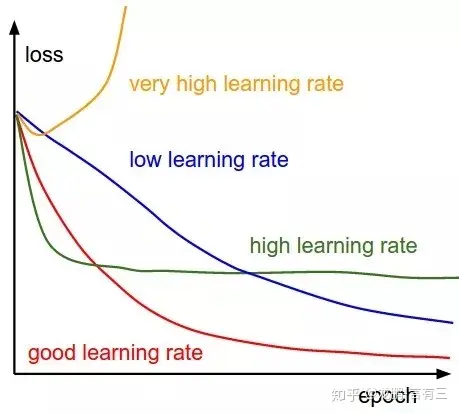

First, the overall takeaway: the more training steps, the further the generated results drifted from the prototype (the subject in the training images).
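Because the runs are configured in epochs but the drift tracks optimizer steps, it helps to convert between the two. The sketch below assumes a single aspect-ratio bucket and no gradient accumulation; the dataset size (20 images, 1 repeat each) is hypothetical, and only `train_batch_size = 8` comes from the parameters used in these runs. With several buckets, each bucket is batched separately, so the real step count can be slightly higher.

```python
import math


def steps_per_epoch(num_images: int, repeats: int, batch_size: int) -> int:
    """Optimizer steps per epoch, assuming one bucket and no gradient accumulation."""
    return math.ceil(num_images * repeats / batch_size)


def total_steps(epoch: int, num_images: int, repeats: int, batch_size: int) -> int:
    """Cumulative optimizer steps after `epoch` full epochs."""
    return epoch * steps_per_epoch(num_images, repeats, batch_size)


if __name__ == "__main__":
    # Hypothetical dataset: 20 images x 1 repeat, batch size 8.
    for epoch in (100, 1000, 3960, 10_000):
        print(epoch, total_steps(epoch, num_images=20, repeats=1, batch_size=8))
```

Under these assumptions one epoch is 3 steps, so "epoch 3960" corresponds to roughly 11,880 steps; a larger dataset reaches the same step count in far fewer epochs.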

Training parameters shared by all runs:

```toml
model_train_type = "sd-lora"
resolution = "512,768"
max_train_epochs = 10_000
train_batch_size = 8
learning_rate = 0.0002
unet_lr = 0.0002
text_encoder_lr = 0.00002
lr_scheduler = "constant"
lr_warmup_steps = 0
optimizer_type = "AdaFactor"
min_snr_gamma = 5

# network
network_module = "networks.lora"
network_dim = 64
network_alpha = 32

# dropout tag
caption_dropout_rate = 0.005
caption_dropout_every_n_epochs = 10
caption_tag_dropout_rate = 0.002

# noise
multires_noise_iterations = 6
multires_noise_discount = 0.3

# speed
mixed_precision = "fp16"
full_fp16 = true
```
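A few of these settings are easier to reason about as numbers. The sketch below is plain Python and assumes the semantics of kohya-ss sd-scripts, which lora-scripts wraps: the LoRA update is scaled by `network_alpha / network_dim`, Min-SNR weighting (for epsilon prediction) multiplies the loss by `min(SNR, gamma) / SNR`, and multires noise adds each extra noise level with amplitude `discount ** i` before renormalising. The SD 1.x noise schedule (`scaled_linear`, betas 0.00085 to 0.012, 1000 steps) is an assumption about the base model, and all function names here are illustrative, not part of either project.

```python
import math
import operator
from itertools import accumulate

# Values copied from the config above.
NETWORK_DIM = 64
NETWORK_ALPHA = 32
MIN_SNR_GAMMA = 5
MULTIRES_ITERATIONS = 6
MULTIRES_DISCOUNT = 0.3


def lora_scale(dim: int, alpha: float) -> float:
    """Multiplier applied to the LoRA update (up @ down): alpha / dim."""
    return alpha / dim


def sd_alphas_cumprod(num_steps: int = 1000,
                      beta_start: float = 0.00085,
                      beta_end: float = 0.012) -> list[float]:
    """Cumulative products of (1 - beta) for an assumed SD 1.x 'scaled_linear' schedule."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    betas = [(lo + (hi - lo) * i / (num_steps - 1)) ** 2 for i in range(num_steps)]
    return list(accumulate((1.0 - b for b in betas), operator.mul))


def min_snr_weight(alpha_bar: float, gamma: float) -> float:
    """Min-SNR-gamma loss weight for epsilon prediction: min(SNR, gamma) / SNR."""
    snr = alpha_bar / (1.0 - alpha_bar)
    return min(snr, gamma) / snr


def multires_level_weights(iterations: int, discount: float) -> list[float]:
    """Relative amplitude of each noise level (discount ** i), before renormalisation."""
    return [discount ** i for i in range(iterations)]


if __name__ == "__main__":
    print("LoRA scale:", lora_scale(NETWORK_DIM, NETWORK_ALPHA))
    alpha_bars = sd_alphas_cumprod()
    for t in (0, 250, 500, 999):
        weight = min_snr_weight(alpha_bars[t], MIN_SNR_GAMMA)
        print(f"t={t:4d}  min-SNR weight={weight:.4f}")
    weights = multires_level_weights(MULTIRES_ITERATIONS, MULTIRES_DISCOUNT)
    print("multires level weights:", [round(w, 4) for w in weights])
```

With `network_alpha = 32` and `network_dim = 64` the LoRA update is halved, which partly offsets the relatively high `unet_lr`. Min-SNR with gamma 5 mainly down-weights the low-noise (small t) timesteps and leaves high-noise ones at full weight, and a discount of 0.3 makes the coarser noise levels fall off quickly.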

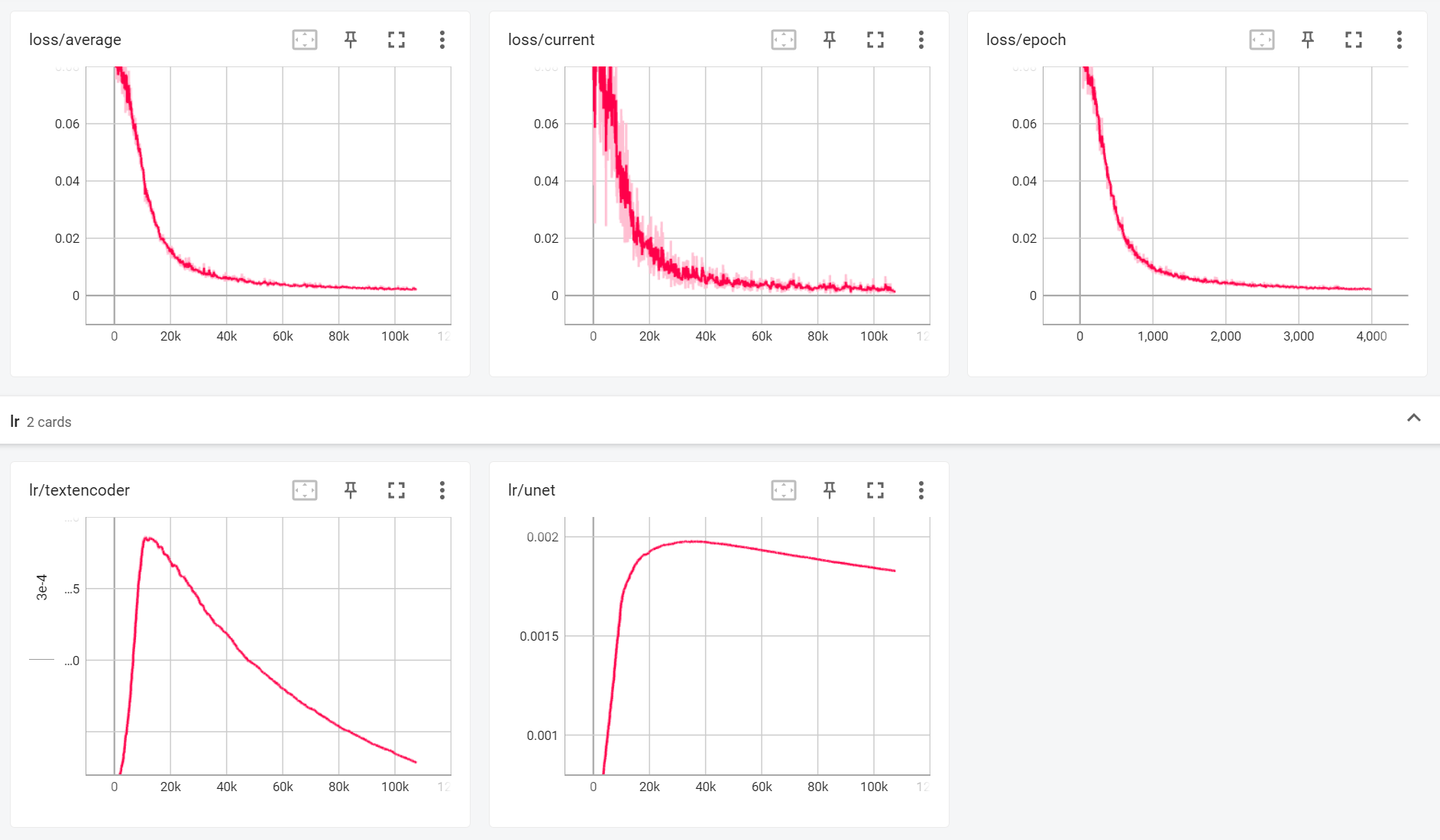

Training results:

TensorBoard (metrics dashboard):

Images generated at around epoch 3960: